The test manager should not forget the final goal of the PRA in this activity, i.e. that it is known:

- what must be tested (object parts)
- what must be examined (characteristics)
- the required thoroughness of testing (risk classes).

To achieve these results, this activity is split up into a number of sub-activities described below:

1. Determining the test goals
2. Determining the relevant characteristics per test goal
3. Distinguishing object parts per characteristic
4. For each characteristic, filling out the risk table for test goals and object parts. This step consists of the following sub-steps:
   - a) Determining the damage per test goal
   - b) Determining the chance of failure per object part
   - c) Determining the risk class per test goal/object part
   - d) Determining the risk class per object part
5. Aggregating characteristics/object parts/risk classes in total overview

The steps above may give the impression that this is a huge and complex activity, but in practice it is less demanding than it looks. The steps have been kept as elementary as possible to make them easy to understand. Depending on the experience of the test manager and the participants, some steps can be completed very quickly or even combined. However, it remains important to realise that communication between the participants represents the greatest challenge.

1) Determining the test goals

The test manager wishes to achieve a risk assessment for object parts and characteristics. To this end, he needs input from the various participants in the PRA. In practice, however, participants often find talking about object parts and characteristics too technical. The test manager should therefore start the PRA from the perspective of the client and other acceptants, i.e. from what they believe to be important. These are called the test goals.

In the first place, therefore, the test goals of the client and other acceptants are collected and established in the PRA, insofar as this was not already done during "Understanding the assignment". The table below contains a few examples of various types of test goals.

| Type of test goal | Examples |
|---|---|
| Business processes | The invoicing and order handling processes continue to function correctly with support from the new/changed system. |
| Subsystems | Correctly functioning subsystems Customer Management and Orders, but sometimes also at the function or screen level: adding customer, editing customer. |
| Product risks | 1) Customer receives incorrect invoice; 2) Users cannot work with the package. |
| Acceptance criteria | Interface between the new Customer System and the existing Order System functions correctly. |
| User requirements | An automated check is used to determine credit-worthiness of the applicant. |
| Use cases | Entering new customer, open account. |
| Critical Success Factors | Online mortgage offer must appear on the intermediary's screen within 1 minute. |
| Quality characteristics | Functionality, performance, user-friendliness, suitability |

Table 1: Examples of test goals

The type of test goal chosen depends on the organisation or project. IT-focused organisations or projects often opt for use cases or user requirements, but also directly for subsystems or quality characteristics. Organisations or projects with a lot of user involvement find it easier to talk about business processes, individual product risks or critical success factors. In maintenance, an important test goal is often that the existing functionality continues to operate correctly. For package implementations, processes are often chosen as test goals. The point is to select the test goals that make sense to the participants.

The test manager must prevent too many test goals from being defined. A general rule of thumb is 3 to 20. If more test goals are defined (which can happen in particular if the participants choose specific product risks as test goals), it must be determined whether these separate risks can be allocated to a limited number of groups.

Defining the test goals is an essential step with which the test manager can, as it were, take the participants by the hand through the next steps. The test goals can also bridge the gap to the stakeholders (from the business) later on, because they enable targeted reporting.

2) Determining the relevant characteristics per test goal

The participants then determine the characteristics relevant for testing for each test goal. In other words, what aspects should the test work cover to be able to realise the test goals?

Organisations generally choose to use the quality characteristics. If the participants find the quality characteristics difficult to understand, the test manager can also use test types, e.g. installability, multi-user, regression, etc. To some participants, an installation test simply means more than the quality characteristic "continuity", of which it is a part. The website http://www.tmap.net/ contains the "Overview of applied test types", which lists the various test types.

In most cases, the characteristic "functionality" is a relevant one. The test manager must make clear that other characteristics, such as performance, user-friendliness and security, can also be relevant for testing. But there are also characteristics that require no testing, for instance because they do not represent a risk or because they are already covered by another test.

| Type of test goal | Examples | Examples of characteristics |
|---|---|---|
| Business processes | Invoicing, orders | Various: functionality, user-friendliness, performance, etc. |
| Subsystems | Subsystem Customer Management, Orders, but sometimes also at the function level: adding customer, editing customer | Various: functionality, user-friendliness, performance, etc. |
| Product risks | 1) Customer receives incorrect invoice; 2) Users cannot work with the package | 1) Functionality; 2) User-friendliness, suitability |
| Acceptance criteria | Interface between the new Customer System and the existing Order System functions correctly | Functionality, perhaps performance and security |
| User requirements | BKR check used to determine credit-worthiness of the applicant | Functionality |
| Use cases | Entering new customer, open account | Various: functionality, user-friendliness, performance, etc. |
| Critical Success Factors | Online mortgage offer must appear on the intermediary's screen within 1 minute. | Performance |
| Quality characteristics | Functionality, performance, user-friendliness, suitability | 1-on-1 |

Table 2: Examples of characteristics per test goal

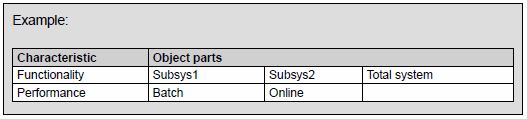

3) Distinguishing object parts per characteristic

After selecting the characteristics relevant for testing for each test goal, the characteristics are collected. The participants then split up the test object into a number of object parts for each characteristic.

The division into object parts allows finer distinctions to be made later when selecting the test coverage. It is important to note that the division into object parts depends on the characteristic. For instance, the characteristic "functionality" will have a different division into object parts than "performance" or "security". In practice, the division into functional parts (subsystems) is the most important, since this will take up most test time later. We recommend making the division coincide with the design structure, unless there are good reasons to deviate from it.

From the functional point of view, the example system can be split up into two large subsystems that can be tested separately. In addition, an integral test of the total system is necessary. This results in a division into three object parts. For the characteristic Performance, a distinction is made between online and batch performance, i.e. the direct response to a user action and the time required to execute batch processes.

The division into characteristics/object parts also makes it possible to identify parts with a strongly deviating risk early in the test process. Such a part can then, for instance, be defined as a separate object part. This allows different components to be tested with different approaches.

For a PRA as part of the master test plan, this division sometimes cannot be made for many characteristics because the necessary information is lacking. In that case, the PRA goes down to the level of characteristics and does not divide them into object parts. That is done later, when the test plans for the separate test levels are created.

There is no fixed guideline for the number of object parts in a test object. A division into up to 7 object parts is still manageable, but practice also shows situations with dozens of object parts, where each function in the system is treated as an object part. Although this is a lot of work, the advantage is that a highly detailed risk analysis is performed and that hours and techniques can be allocated per function very quickly at a later stage.

4) For each characteristic, filling out the risk table for test goals and object parts

The test goals are plotted against the object parts (functional subsystems, online/batch performance, etc.), and the participants determine the risk classification for each characteristic. This is done in the sub-steps described below. The examples below use the following absolute classification:

| Damage in case of failure (rows) / Chance of failure (columns) | High | Medium | Low |
|---|---|---|---|
| High | A | B | B |
| Medium | B | B | C |
| Low | C | C | C |
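Read as a rule, the classification above is a lookup from a (damage, chance of failure) pair to a risk class. The following minimal Python sketch encodes the table exactly as given; the names `RISK_MATRIX` and `risk_class` are illustrative and not part of the method.

```python
# Absolute classification from the table above, encoded as a lookup.
# Keys are (damage, chance_of_failure); each value is "H", "M" or "L".
RISK_MATRIX = {
    ("H", "H"): "A", ("H", "M"): "B", ("H", "L"): "B",
    ("M", "H"): "B", ("M", "M"): "B", ("M", "L"): "C",
    ("L", "H"): "C", ("L", "M"): "C", ("L", "L"): "C",
}


def risk_class(damage: str, chance_of_failure: str) -> str:
    """Return the risk class (A, B or C) that the matrix gives for one junction."""
    try:
        return RISK_MATRIX[(damage, chance_of_failure)]
    except KeyError:
        raise ValueError(
            f"expected H, M or L, got damage={damage!r}, chance={chance_of_failure!r}"
        ) from None


print(risk_class("H", "H"))  # A
print(risk_class("H", "L"))  # B
print(risk_class("L", "H"))  # C
```

The encoding makes the asymmetry of the table explicit: high damage combined with a low chance of failure still yields B, whereas low damage yields C whatever the chance of failure.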

a) Damage per test goal

The participants first estimate, per test goal, what the damage will be if the characteristic is inadequate. This step in particular requires input from the users. The example below uses the business processes requiring support as test goals.

Characteristic: Functionality

| Test goal | Damage |
|---|---|
| Business process 1 | H |
| Business process 2 | H |
| Business process 3 | L |

b) Chance of failure per object part

They then estimate the chance of failure for each object part. This estimate requires more input about the technical architecture and the development process.

| Functionality | Subsys1 | Subsys2 | Total system |
|---|---|---|---|
| Chance of failure | H | M | L |

c) Risk class per test goal/object part

At the junctions of test goals and object parts, the participants then indicate whether the combination is relevant by assigning a risk class. The risk class is derived from the damage per test goal and the chance of failure per object part, using the classification above. The participants can deviate from this general formula, for instance because they consider the actual risk to be much higher or lower than the risk class that follows from chance of failure × damage. Any deviation should always be accompanied by a comment.

Characteristic: Functionality

| Test goal | Damage | Subsys1 | Subsys2 | Total system |
|---|---|---|---|---|
| Chance of failure | | H | M | L |
| Business process 1 | H | A | - \* | - |
| Business process 2 | H | A | A’ \*\* | C’ |
| Business process 3 | L | C | C | C |

\* A minus sign indicates that the combination is not relevant for testing.

\*\* A notation such as ’ (or a capital letter or bold type) can be used to indicate that there are special comments on the junction, for instance that it involves only a small part of the subsystem, or that a different risk is associated with it than would be concluded from the chance of failure per subsystem and the damage per business process.
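Because every deviation from the formula must be commented on, it can help to check the agreed junctions against the matrix. The sketch below assumes the functionality example above is captured as plain dictionaries; the names `DAMAGE`, `CHANCE`, `AGREED` and `deviations` are hypothetical, and the ’ marks are dropped from the agreed classes.

```python
# Absolute classification: (damage, chance of failure) -> risk class.
RISK_MATRIX = {
    ("H", "H"): "A", ("H", "M"): "B", ("H", "L"): "B",
    ("M", "H"): "B", ("M", "M"): "B", ("M", "L"): "C",
    ("L", "H"): "C", ("L", "M"): "C", ("L", "L"): "C",
}

DAMAGE = {"Business process 1": "H", "Business process 2": "H", "Business process 3": "L"}
CHANCE = {"Subsys1": "H", "Subsys2": "M", "Total system": "L"}

# Risk classes agreed by the participants (functionality example).
# None stands for "-" (combination not relevant).
AGREED = {
    ("Business process 1", "Subsys1"): "A",
    ("Business process 1", "Subsys2"): None,
    ("Business process 1", "Total system"): None,
    ("Business process 2", "Subsys1"): "A",
    ("Business process 2", "Subsys2"): "A",
    ("Business process 2", "Total system"): "C",
    ("Business process 3", "Subsys1"): "C",
    ("Business process 3", "Subsys2"): "C",
    ("Business process 3", "Total system"): "C",
}


def deviations(damage, chance, agreed):
    """Return {(test goal, object part): (class by formula, agreed class)}
    for every relevant junction where the agreed class differs from the matrix."""
    result = {}
    for (goal, part), agreed_class in agreed.items():
        if agreed_class is None:  # "-": not relevant, nothing to compare
            continue
        by_formula = RISK_MATRIX[(damage[goal], chance[part])]
        if agreed_class != by_formula:
            result[(goal, part)] = (by_formula, agreed_class)
    return result


for (goal, part), (formula, chosen) in sorted(deviations(DAMAGE, CHANCE, AGREED).items()):
    print(f"{goal} / {part}: formula {formula}, agreed {chosen} -> comment required")
```

For the example, this flags exactly the two junctions marked with ’ (business process 2 against Subsys2 and against the total system), where the formula gives B.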

d) Risk class per object part

The result that must be recorded in the risk table is a risk class per object part (of a characteristic). Based on this, the test strategy can choose to test the characteristic/object part combination with light, medium or heavy thoroughness. The risk class of an object part is derived from the risk classes at the junctions of that object part with the test goals in step c. An agreement must be reached about how the risk classes of the separate junctions together determine the risk class of the object part.

In the example below, the risk class of the object part was determined with the following agreement: the risk class of the object part as a whole equals the risk class that occurs most often at its junctions. If risk classes A and C occur equally often as the most common, the object part is given risk class B. If risk classes A and B, or B and C, occur equally often as the most common, the higher risk class is selected.

Characteristic: Functionality

| Test goal | Damage | Subsys1 | Subsys2 | Total system |
|---|---|---|---|---|
| Chance of failure | | H | M | L |
| Business process 1 | H | A | - | - |
| Business process 2 | H | A | A’ | C’ |
| Business process 3 | L | C | C | C |
| Risk class | | A | B | C |
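The aggregation agreement used in this example can be written as a small function. This is a sketch of this particular agreement only, not a fixed rule of the method. The name `object_part_class` is hypothetical, and the handling of a three-way tie (A, B and C equally common) is an assumption, since the agreement does not cover that case.

```python
from collections import Counter

# Higher number = higher risk; used to pick the higher class on a tie.
RANK = {"A": 3, "B": 2, "C": 1}


def object_part_class(junction_classes):
    """Aggregate the junction classes of one object part (None = "-", not relevant).

    Agreement from the text: the most frequent class wins; an A/C tie gives B;
    an A/B or B/C tie gives the higher class. A three-way tie is not covered
    by the agreement; this sketch assumes the highest class (A) in that case.
    """
    counts = Counter(c for c in junction_classes if c is not None)
    if not counts:
        return None  # no relevant junctions at all
    top = max(counts.values())
    tied = {c for c, n in counts.items() if n == top}
    if tied == {"A", "C"}:
        return "B"
    return max(tied, key=RANK.__getitem__)


# Columns of the functionality example (business processes 1 to 3, top to bottom).
columns = {
    "Subsys1": ["A", "A", "C"],
    "Subsys2": [None, "A", "C"],
    "Total system": [None, "C", "C"],
}
for part, classes in columns.items():
    print(part, object_part_class(classes))
```

The same function also reproduces the performance example that follows: Online (B, B, -) gives B and Batch (-, C, C) gives C.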

Below you will find an example for the performance characteristic.

Characteristic: Performance

| Test goal | Damage | Online | Batch |
|---|---|---|---|
| Chance of failure | | M | L |
| Business process 1 | H | B | - |
| Business process 2 | M\* | B | C |
| Business process 3 | M | - | C |
| Risk class | | B | C |

\* The damage deviates from the functionality table above. This is because the damage caused by functionality that does not work correctly is valued as greater than the damage caused by inadequate performance.

These risk tables relate the test goals (e.g. business processes, user requirements or separate product risks) to the characteristics and object parts, so that the test manager can report at the test goal level in subsequent reports. For this reason, the tables must be maintained even after the test plan has been created, i.e. throughout the test process.
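Reporting at the test goal level amounts to reading the junction tables from the test goal side. A minimal sketch, assuming the functionality and performance examples above are stored as one dictionary of junctions ("-" junctions left out, ’ marks dropped); `coverage_per_test_goal`, `open_items` and the `tested` status set are hypothetical names introduced for illustration.

```python
from collections import defaultdict

# (test goal, characteristic, object part) -> risk class, from the example tables.
JUNCTIONS = {
    ("Business process 1", "Functionality", "Subsys1"): "A",
    ("Business process 2", "Functionality", "Subsys1"): "A",
    ("Business process 2", "Functionality", "Subsys2"): "A",
    ("Business process 2", "Functionality", "Total system"): "C",
    ("Business process 3", "Functionality", "Subsys1"): "C",
    ("Business process 3", "Functionality", "Subsys2"): "C",
    ("Business process 3", "Functionality", "Total system"): "C",
    ("Business process 1", "Performance", "Online"): "B",
    ("Business process 2", "Performance", "Online"): "B",
    ("Business process 2", "Performance", "Batch"): "C",
    ("Business process 3", "Performance", "Batch"): "C",
}


def coverage_per_test_goal(junctions):
    """Group the junctions by test goal: goal -> [(characteristic, object part, class)]."""
    per_goal = defaultdict(list)
    for (goal, characteristic, part), risk in sorted(junctions.items()):
        per_goal[goal].append((characteristic, part, risk))
    return dict(per_goal)


def open_items(per_goal, tested):
    """Per test goal, the characteristic/object parts whose testing is not yet done.

    `tested` is a hypothetical set of (characteristic, object part) pairs whose
    tests are complete; how that status is tracked is outside this sketch.
    """
    return {
        goal: [(c, p) for c, p, _ in items if (c, p) not in tested]
        for goal, items in per_goal.items()
    }


per_goal = coverage_per_test_goal(JUNCTIONS)
tested = {("Functionality", "Subsys1"), ("Performance", "Online")}
for goal, remaining in open_items(per_goal, tested).items():
    print(goal, "->", remaining or "all related parts tested")
```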

Balanced risk estimation

A risk class is allocated to each characteristic/object part. The risk class represents the risk (damage × chance of failure) as estimated on the basis of test goals, characteristics and object parts. However, the test manager must prevent every characteristic/object part from being assigned the highest risk class (A). Participants and stakeholders have a natural tendency to assess risks as high and therefore select A. Tips to prevent this are:

- The starting point is that all damage and chance of failure indications start at “Medium”; the participants must then “demonstrate”, per indication, why the damage or chance of failure is higher than average for this aspect.
- Do not compare the characteristics with each other (“functionality is higher risk than performance”), because a characteristic like functionality would then always be assigned an A.
- Discuss the implications of a specific choice for the test effort: give a quick indication of what a thorough test and a light test of a specific characteristic would cost in terms of time and money.
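The first tip can be built into whatever is used to record the estimates. In the hypothetical Python sketch below, every indication defaults to Medium and can only be raised to High together with an argument. Requiring an argument for H only follows the tip literally; a team might choose to require one for L as well. The class name `Indication` and the example rationale are illustrative.

```python
from dataclasses import dataclass

VALUES = ("H", "M", "L")


@dataclass
class Indication:
    """A damage or chance-of-failure indication that starts at Medium."""

    subject: str
    value: str = "M"
    rationale: str = ""

    def set_value(self, value: str, rationale: str = "") -> None:
        """Change the indication; raising it to H must be argued explicitly."""
        if value not in VALUES:
            raise ValueError(f"{self.subject}: expected one of {VALUES}, got {value!r}")
        if value == "H" and not rationale.strip():
            raise ValueError(f"{self.subject}: demonstrate why this is higher than average")
        self.value = value
        self.rationale = rationale


damage_bp1 = Indication("Damage, Business process 1")
# Illustrative argument, phrased in organisational terms rather than product properties.
damage_bp1.set_value("H", "Customer receives invoice after 2 days instead of the next day")
print(damage_bp1)
```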

Test process risks

In addition to product risks, a PRA often also lists various other types of risks, such as process and (test) project risks. The test manager must ensure that these are recorded and submitted to the authorised party or person, such as the project manager. Typical test process risks are included in a separate section of the (master) test plan.

Communicate in the language of the participants

The test manager must ensure that communication takes place as much as possible in the terminology of the stakeholders. Put very simply, the following questions can often be asked: “What if this [test goal/characteristic/object part] doesn’t work? And what would be the consequences?” A related tip is that the participants should keep probing until the answer is expressed in terms of time, money, customers or image. This means talking as little as possible about product properties (“Consequence: invoicing program needs 4 hours instead of 2”) and as much as possible about what it means for the organisation (“Customer receives invoice after 2 days instead of the next day”).

New requirements

Experience shows that new requirements are “discovered” in a PRA fairly often. The test manager must collect these and feed them back to the project, the person responsible for the requirements, or the change control board.

5) Aggregating characteristics/object parts/risk classes in total overview

The PRA total overview is created on the basis of the separate risk tables. This step is an administrative exercise and requires no input from the participants. Its advantage is that the participants, test manager and client obtain a complete overview. The risk tables are optionally included in the (master) test plan as an annex and, in any case, maintained to enable subsequent reporting on the test goals. The table below is an example of a total overview. The argumentation column summarises the reasons, related to the test goals, for assigning the relevant risk class to the characteristic or object part.

| Characteristic | Risk class | Argumentation |
|---|---|---|
| Functionality | | |
| - subsys 1 | A | high chance of failure, used in vital processes 1 and 2 |
| - subsys 2 | B | medium chance of failure, used only to a limited extent in vital process 2 |
| - total | C | if subsys 1 and 2 function correctly, the risk of integration problems is low. |
| User-friendliness | B | … |
| Performance | | |
| - online | B | |
| - batch | C | |
| Security | A | |
| Suitability | B | |
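Because step 5 is purely administrative, it lends itself to a simple script. The sketch below assumes each risk table has already been reduced to a mapping from object part to (risk class, argumentation), with the key `None` for a characteristic that is not divided into object parts; this data structure and the name `total_overview` are hypothetical, and only part of the example overview is included.

```python
# Output of step 4d per characteristic: object part -> (risk class, argumentation).
# A characteristic without object parts uses the key None.
RISK_TABLES = {
    "Functionality": {
        "subsys 1": ("A", "high chance of failure, used in vital processes 1 and 2"),
        "subsys 2": ("B", "medium chance of failure, used only to a limited extent in vital process 2"),
        "total": ("C", "if subsys 1 and 2 function correctly, the risk of integration problems is low"),
    },
    "Performance": {"online": ("B", ""), "batch": ("C", "")},
    "Security": {None: ("A", "")},
}


def total_overview(risk_tables):
    """Flatten the separate risk tables into (characteristic/object part, class, argumentation) rows."""
    rows = []
    for characteristic, parts in risk_tables.items():
        if list(parts) == [None]:  # no division into object parts
            risk, why = parts[None]
            rows.append((characteristic, risk, why))
            continue
        rows.append((characteristic, "", ""))  # heading row for the characteristic
        for part, (risk, why) in parts.items():
            rows.append((f"- {part}", risk, why))
    return rows


for row in total_overview(RISK_TABLES):
    print(" | ".join(row))
```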

In case of inadequate detail

If insufficient detail is available, e.g. on the object parts, at the time the master test plan is created, only the characteristics remain in the first column (see the example below).

| Characteristic | Risk class | Argumentation |
|---|---|---|
| Functionality | B | … |
| User-friendliness | B | |
| Performance | B | |
| Security | A | |
| Suitability | B | |
| … | | |

The detail required to establish a test strategy must then be elaborated further in the PRAs of the individual test plans. If, in the example above, functionality and performance are allocated to the system test on the basis of the master test plan, the PRA for the system test could be detailed as follows.

Example for the system test:

| Characteristic | RC MTP | Risk class | Argumentation |
|---|---|---|---|
| Functionality | B | | … |
| - Subsys1 | | A | |
| - Subsys2 | | B | |
| - Totalsys | | C | |
| Performance | B | | |
| - online | | B | |
| - batch | | C | |

RC MTP = Risk class assigned to the characteristic in the master test plan

A = High risk class

B = Medium risk class

C = Low risk class

Product risks during maintenance

Since testing differs between new build and maintenance, this has consequences for the steps of the risk analysis to be executed. For the test strategy, the main difference between new build and maintenance is the chance of defects. For the PRA, this means that the risk classification of the characteristics/object parts for maintenance differs from that in a new build situation.

Maintenance means that a number of modifications, usually resulting from defect reports or change proposals, are implemented in an existing system. These modifications may be implemented incorrectly and therefore require testing. Each modification also carries a small chance that defects are unintentionally introduced in unchanged parts of the system, deteriorating the system’s quality. This phenomenon of quality deterioration is called regression, and it is the reason why the unchanged parts of the system are also tested. A characteristic/object part that had a high risk during the new build may remain unchanged in the maintenance release. Since regression is then the only risk, much less thorough testing is required. For this reason, the PRA and strategy determination can be adjusted for maintenance by treating the changes as object parts. This form of PRA and strategy determination is often executed through a kick-off session as described in 2. Determining The PRA Approach. For each change (an accepted change proposal or solved problem report), an inventory is made of which characteristics are relevant, which parts of the system are changed, and which parts of the system may be affected by the change.

A tip here is that the chance of defects may differ for each new release, but the damage and frequency-of-use factors change much less quickly. This information can therefore be reused for the PRA of each subsequent release. However, the management organisation must keep this information up to date.
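The tip can be reflected in how the data is kept: the damage per test goal is stored once and reused, while each release supplies only its changes (as object parts), their chance of failure and the test goals they may affect. The sketch below assumes the absolute classification from step 4; the change IDs and the function name `release_junctions` are hypothetical, and frequency of use is left out for brevity.

```python
# Absolute classification: (damage, chance of failure) -> risk class.
RISK_MATRIX = {
    ("H", "H"): "A", ("H", "M"): "B", ("H", "L"): "B",
    ("M", "H"): "B", ("M", "M"): "B", ("M", "L"): "C",
    ("L", "H"): "C", ("L", "M"): "C", ("L", "L"): "C",
}

# Damage per test goal: reused from release to release and kept up to date.
DAMAGE = {"Business process 1": "H", "Business process 2": "H", "Business process 3": "L"}


def release_junctions(chance_per_change, affected_goals, damage=DAMAGE):
    """Risk class per (change, test goal) for one maintenance release.

    chance_per_change: change -> H/M/L, estimated anew for this release.
    affected_goals: change -> test goals the change may affect.
    """
    return {
        (change, goal): RISK_MATRIX[(damage[goal], chance)]
        for change, chance in chance_per_change.items()
        for goal in affected_goals[change]
    }


# Hypothetical change IDs: a change proposal (CP) and a problem report (PR).
release_1 = release_junctions(
    {"CP-101": "H", "PR-17": "L"},
    {"CP-101": ["Business process 1"], "PR-17": ["Business process 2", "Business process 3"]},
)
# The next release reuses DAMAGE; only the changes and their chances are new.
release_2 = release_junctions({"CP-205": "M"}, {"CP-205": ["Business process 3"]})
print(release_1)
print(release_2)
```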